I stumbled upon an a16z podcast in which Matt Biilmann, the CEO of Netlify, talked about the concept of “Agent UX” and how Netlify is optimizing for both coding agents and user agents.

It dawned on me that I can do the same Agent UX optimization for my personal site.

Google still matters. But increasingly, people are getting answers from ChatGPT, Perplexity, and Claude. These AI engines don’t just crawl your site — they need to understand it. And the way they understand content is fundamentally different from how Google’s PageRank works.

So I spent an afternoon fixing that. Here’s what I did and why.

The problem: invisible to AI search

Traditional SEO optimizes for Google’s crawler — keywords, backlinks, meta descriptions, page speed. That still matters.

But AI search engines like ChatGPT work differently. They don’t rank pages. They synthesize answers from sources they trust. To get cited, your content needs to be:

- Crawlable — AI bots need explicit permission to access your site

- Understandable — structured data helps AI parse who you are and what you do

- Digestible — LLMs work best with clean, plain-text content they can ingest wholesale

Most personal sites fail on all three counts. Mine did too.

What I changed (in one afternoon)

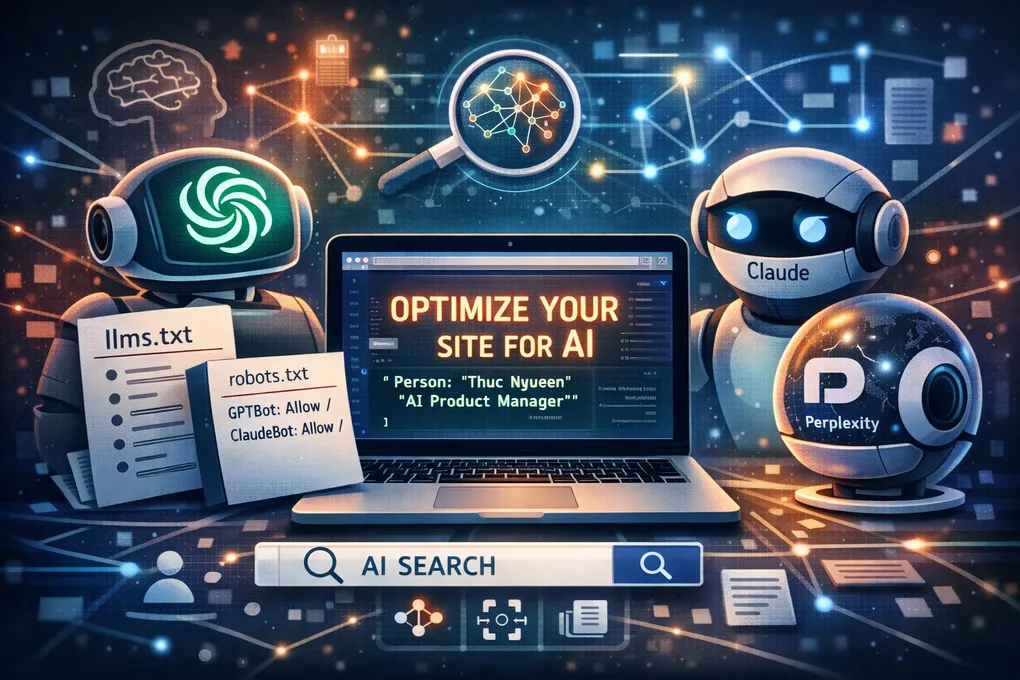

1. Welcomed AI crawlers explicitly

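My robots.txt was effectively a single rule: Allow: /. That is technically permissive, and any crawler that follows the Robots Exclusion Protocol should read it as permission to crawl. But AI crawlers like GPTBot, ClaudeBot, and PerplexityBot each look for rules under their own User-agent token, and I wanted the welcome to be explicit rather than implied.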

I added named rules for each major AI user-agent token: GPTBot, ChatGPT-User, OAI-SearchBot, ClaudeBot, anthropic-ai, PerplexityBot, Google-Extended, and Bytespider. A small file change that leaves no doubt about whether AI crawlers are welcome.
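Here is a minimal sketch of how a file like that could be generated, assuming one catch-all group followed by one explicit group per AI crawler. The function name `buildRobotsTxt` and the `example.com` sitemap URL are placeholders for illustration, not my actual setup, and the token list is just the one named above; check each vendor's documentation for current values.

```typescript
// User-agent tokens named in this post; vendors may add or rename tokens over time.
const AI_CRAWLERS: string[] = [
  "GPTBot",
  "ChatGPT-User",
  "OAI-SearchBot",
  "ClaudeBot",
  "anthropic-ai",
  "PerplexityBot",
  "Google-Extended",
  "Bytespider",
];

// Build a robots.txt body: a catch-all group, then one explicit group per agent.
// sitemapUrl is optional and hypothetical here.
function buildRobotsTxt(agents: string[], sitemapUrl?: string): string {
  const groups: string[] = ["User-agent: *\nAllow: /"];
  for (const agent of agents) {
    groups.push(`User-agent: ${agent}\nAllow: /`);
  }
  if (sitemapUrl) {
    groups.push(`Sitemap: ${sitemapUrl}`);
  }
  // Groups are separated by a blank line; the file ends with a newline.
  return groups.join("\n\n") + "\n";
}

const robotsTxt: string = buildRobotsTxt(
  AI_CRAWLERS,
  "https://example.com/sitemap.xml" // placeholder URL
);
console.log(robotsTxt);
```

Write the resulting string to `/robots.txt` at the site root. Keeping the agent list in one array makes it easy to add a new crawler later without hand-editing the file.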

2. Created llms.txt files

This is the move most people don’t know about yet. The llms.txt proposal (think of it as a robots.txt-style convention for LLMs, though it is not yet a formal standard) gives AI systems a structured, plain-text summary of your site: who you are, what you’re expert in, and links to your best content.

I created two files:

- /llms.txt: a concise bio, expertise list, and links to my featured articles

- /llms-full.txt: full article content in clean plain text, optimized for LLM ingestion

When ChatGPT or Claude crawl my site, they now find a purpose-built document that says: “Here’s exactly who this person is, what they know, and what they’ve written.” No HTML parsing required.
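To make the shape of `/llms.txt` concrete, here is a rough sketch that renders one from structured data. It follows the layout the llms.txt proposal describes (an H1 title, a blockquote summary, then H2 sections of Markdown links). The type names, the `buildLlmsTxt` function, and the article entry with its `example.com` URL are all hypothetical placeholders.

```typescript
interface LinkEntry {
  title: string;
  url: string;
  note?: string; // optional one-line description of the link
}

interface SiteSummary {
  name: string;
  summary: string;
  sections: { heading: string; links: LinkEntry[] }[];
}

// Render an llms.txt document: "# name", "> summary", then "## heading" link lists.
function buildLlmsTxt(site: SiteSummary): string {
  const lines: string[] = [`# ${site.name}`, "", `> ${site.summary}`];
  for (const section of site.sections) {
    lines.push("", `## ${section.heading}`, "");
    for (const link of section.links) {
      const note = link.note ? `: ${link.note}` : "";
      lines.push(`- [${link.title}](${link.url})${note}`);
    }
  }
  return lines.join("\n") + "\n";
}

const llmsTxt: string = buildLlmsTxt({
  name: "Thuc Nguyen",
  summary: "Senior PM at Axon, writing about AI-first product management.",
  sections: [
    {
      heading: "Featured articles",
      links: [
        {
          title: "Example article", // placeholder entry
          url: "https://example.com/blog/example-article", // placeholder URL
          note: "placeholder entry",
        },
      ],
    },
  ],
});
console.log(llmsTxt);
```

Generating the file from the same data that drives the blog index means it stays current whenever a new post is published.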

3. Added structured data (JSON-LD) everywhere

Search engines — both traditional and AI — love structured data. I added JSON-LD schemas across my site:

- Homepage: Person schema (name, title, employer, expertise areas) and WebSite schema

- Blog posts: BlogPosting schema (headline, date, author, publisher)

- About page: ProfilePage schema with full career details

This is how you tell Google’s Knowledge Graph and AI search engines: “This isn’t just a random blog. This is Thuc Nguyen, Senior PM at Axon, writing about AI-first product management.” Structured data turns your site from a collection of pages into an entity that AI systems can reason about.
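As an illustration, the Person and BlogPosting schemas could be built and serialized roughly like this. The property names (`jobTitle`, `worksFor`, `knowsAbout`, `headline`, `datePublished`, `author`, `publisher`) are standard schema.org vocabulary. The helper names, the `example.com` URLs, the headline, and the date are assumptions made up for the sketch.

```typescript
// Person schema for the homepage. The url is a placeholder.
const person = {
  "@context": "https://schema.org",
  "@type": "Person",
  name: "Thuc Nguyen",
  jobTitle: "Senior PM",
  worksFor: { "@type": "Organization", name: "Axon" },
  knowsAbout: [
    "AI-first product management",
    "enterprise AI workflows",
    "public safety technology",
  ],
  url: "https://example.com",
};

// BlogPosting schema for a single post; author and publisher point back to the person.
function blogPosting(headline: string, datePublished: string, url: string) {
  return {
    "@context": "https://schema.org",
    "@type": "BlogPosting",
    headline,
    datePublished, // ISO 8601 date string
    url,
    author: { "@type": "Person", name: person.name, url: person.url },
    publisher: { "@type": "Person", name: person.name },
  };
}

// Serialize to a script tag. Escaping "<" keeps a stray "</script>" inside
// the data from closing the tag early; JSON parsers read \u003c back as "<".
function toJsonLdScript(data: object): string {
  const json = JSON.stringify(data, null, 2).replace(/</g, "\\u003c");
  return `<script type="application/ld+json">\n${json}\n</script>`;
}

const homepageScript: string = toJsonLdScript(person);
const postScript: string = toJsonLdScript(
  // Placeholder headline, date, and URL.
  blogPosting("Example headline", "2025-01-01", "https://example.com/blog/example")
);
console.log(homepageScript);
```

Each string goes into the `<head>` of the matching page. Google's Rich Results Test or the Schema.org validator can confirm the markup parses as intended.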

4. Rewrote meta descriptions with intent

My old site description was literally “Thuc Personal Blog.” It said nothing about what I do or why anyone should care.

Every meta description, page title, and hero section now contains the keywords I want to be known for: AI-first product manager, enterprise AI workflows, public safety technology. Not stuffed unnaturally — woven into real sentences that describe what I actually do.

5. Cleaned up performance bottlenecks

A legacy service worker cleanup script was running on every single page load — unregistering workers, clearing caches, and then reloading the page. Every visit. Forever.

I wrapped it in a localStorage guard so it runs once and never again. Small fix, but page reload loops are the kind of thing that makes both users and crawlers give up on your site.
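The guard pattern looks roughly like this. It is a sketch, not my exact script: `runOnce`, the `sw-cleanup-done` key, and the in-memory store are hypothetical names. In the browser you would pass `window.localStorage`, which has the same `getItem`/`setItem` shape, and the task would do the real unregister, cache-clear, and reload work.

```typescript
// Minimal subset of the Web Storage API that the guard needs.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

// Run `task` only if `flagKey` has never been set in `store`.
// Returns true if the task ran, false if it was skipped.
function runOnce(store: KeyValueStore, flagKey: string, task: () => void): boolean {
  if (store.getItem(flagKey) !== null) {
    return false; // already ran on an earlier visit
  }
  // Set the flag before running the task: if the task reloads the page,
  // the next load sees the flag and skips the cleanup, so no loop.
  store.setItem(flagKey, new Date().toISOString());
  task();
  return true;
}

// In-memory stand-in for localStorage so the sketch runs outside a browser.
function createMemoryStore(): KeyValueStore {
  const data = new Map<string, string>();
  return {
    getItem: (key: string): string | null => {
      const value = data.get(key);
      return value === undefined ? null : value;
    },
    setItem: (key: string, value: string): void => {
      data.set(key, value);
    },
  };
}

const memoryStore: KeyValueStore = createMemoryStore();
let cleanupRuns = 0;
const firstVisit: boolean = runOnce(memoryStore, "sw-cleanup-done", () => {
  cleanupRuns += 1; // stands in for the real cleanup work
});
const secondVisit: boolean = runOnce(memoryStore, "sw-cleanup-done", () => {
  cleanupRuns += 1;
});
console.log(firstVisit, secondVisit, cleanupRuns);
```

Writing the flag before the task runs is the important design choice. A guard that sets the flag afterwards can be skipped by the reload itself, which brings the loop right back.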

Why PMs should care about this

If you’re a product manager building a personal brand, your site is your product. And like any product, you need to understand your distribution channels.

The distribution channel for professional visibility is shifting from “Google ranks your page” to “ChatGPT cites you as a source.” This isn’t hypothetical — it’s happening now. When a hiring manager asks ChatGPT “Who writes about AI product management?”, you either show up or you don’t.

The bar to get started is surprisingly low. You don’t need to be a frontend engineer. I made all the changes described above using Claude Code and Cursor, then verified them with Google Search Console and ChatGPT, in a single afternoon. The total cost was zero dollars and about two hours of focused work.

The AI-first PM pattern

This is the same pattern from my AI-first PM workflow: identify work that a human shouldn’t be doing manually, then design a system where AI handles it.

Writing JSON-LD by hand? Tedious. Figuring out which AI crawlers exist and their exact User-agent strings? Annoying. Generating a plain-text version of your entire blog for LLM consumption? Nobody wants to maintain that.

But describing what you want to an AI coding tool and letting it execute? That’s an afternoon project.

The meta lesson: the same AI-first mindset that makes you a better PM also makes you more visible to the AI systems that are increasingly deciding who gets discovered.

If you want to do this for your own site, search for “llms.txt standard”, “JSON-LD structured data”, and “robots.txt AI crawlers.” The ecosystem is moving fast — by the time you read this, there will be even more AI search engines to optimize for.

That’s the fun part. Start now, and you’re early.
